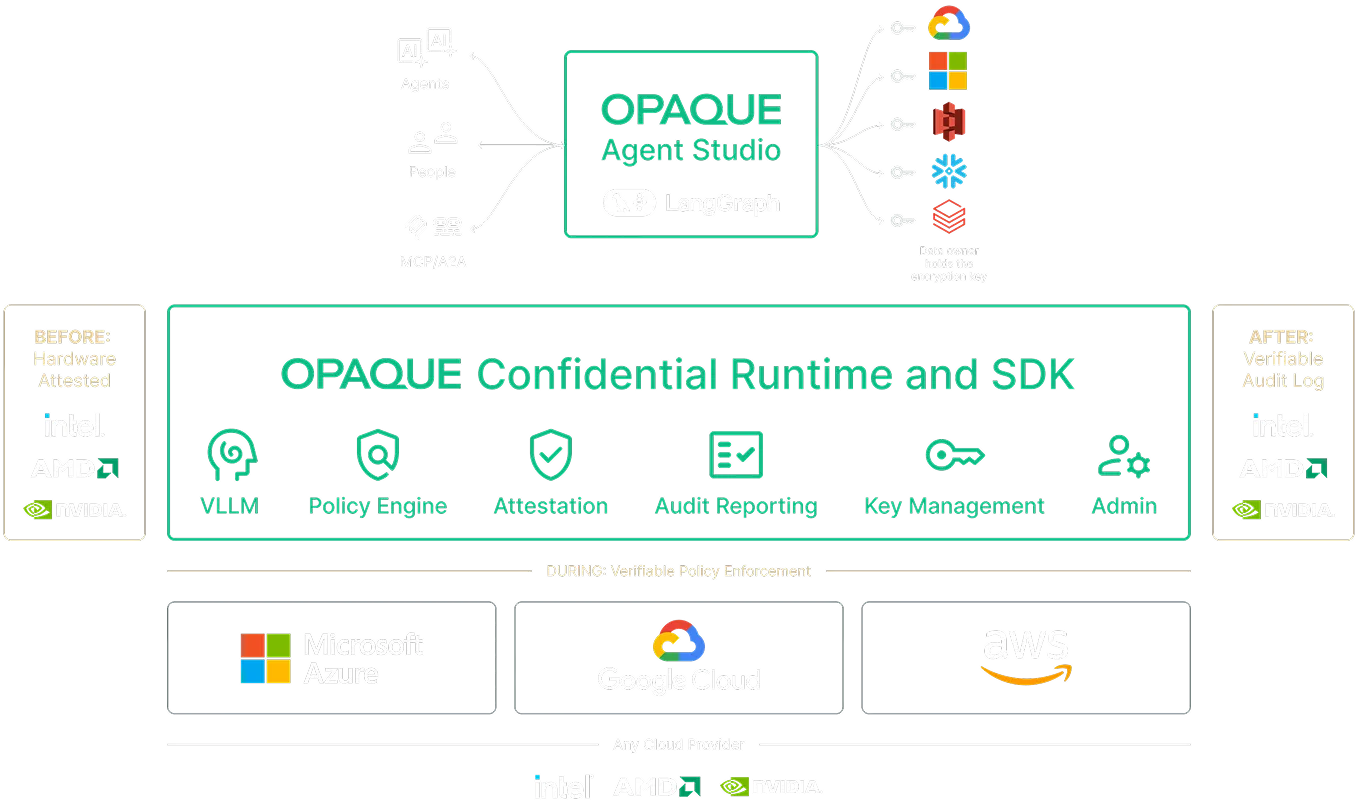


Run AI workloads on your most sensitive data with verifiable privacy and governance.

OPAQUE runs your agentic AI workloads on sensitive data inside hardware-secured environments, keeping data encrypted during execution and continuously verifying that your approved policies are enforced.

VALUE

How Enterprises Get Value from AI with OPAQUE

Unlock sensitive data for AI

Prove—before and after execution—that regulated, proprietary, or high-risk data stays private from unauthorized users, applications, and cloud providers, so data owners and InfoSec teams can confidently approve AI workloads for production.

Define and enforce policies across the AI lifecycle

Control which data, models, agents, and tools can be used—at build time, deploy time, and runtime—and have those policies enforced automatically at every step of an AI workflow.

Secure AI workloads at runtime, not just on paper

Authenticate AI workloads, restrict what they can communicate with, and enforce infrastructure and networking boundaries to reduce runtime security risk and prevent data leakage.

Generate verifiable proof—not assumptions

Produce cryptographically verifiable artifacts showing what ran, where it ran, and which policies were enforced—giving CISOs, auditors, and regulators audit-ready evidence on demand.

Govern AI consistently across teams and clouds

Use OPAQUE as a common trust layer to apply enterprise-wide AI policies across clouds, business units, and agent frameworks—without rebuilding controls or duplicating compliance work.

WHO IT’S FOR

The OPAQUE Platform

OPAQUE is a Confidential AI platform that runs inside your cloud and provides verifiable runtime guarantees of data privacy and policy enforcement across all your AI agents and workflows. Teams can build in OPAQUE’s Agent Studio or deploy their containerized AI workloads directly using OPAQUE’s Confidential Runtime & SDK.

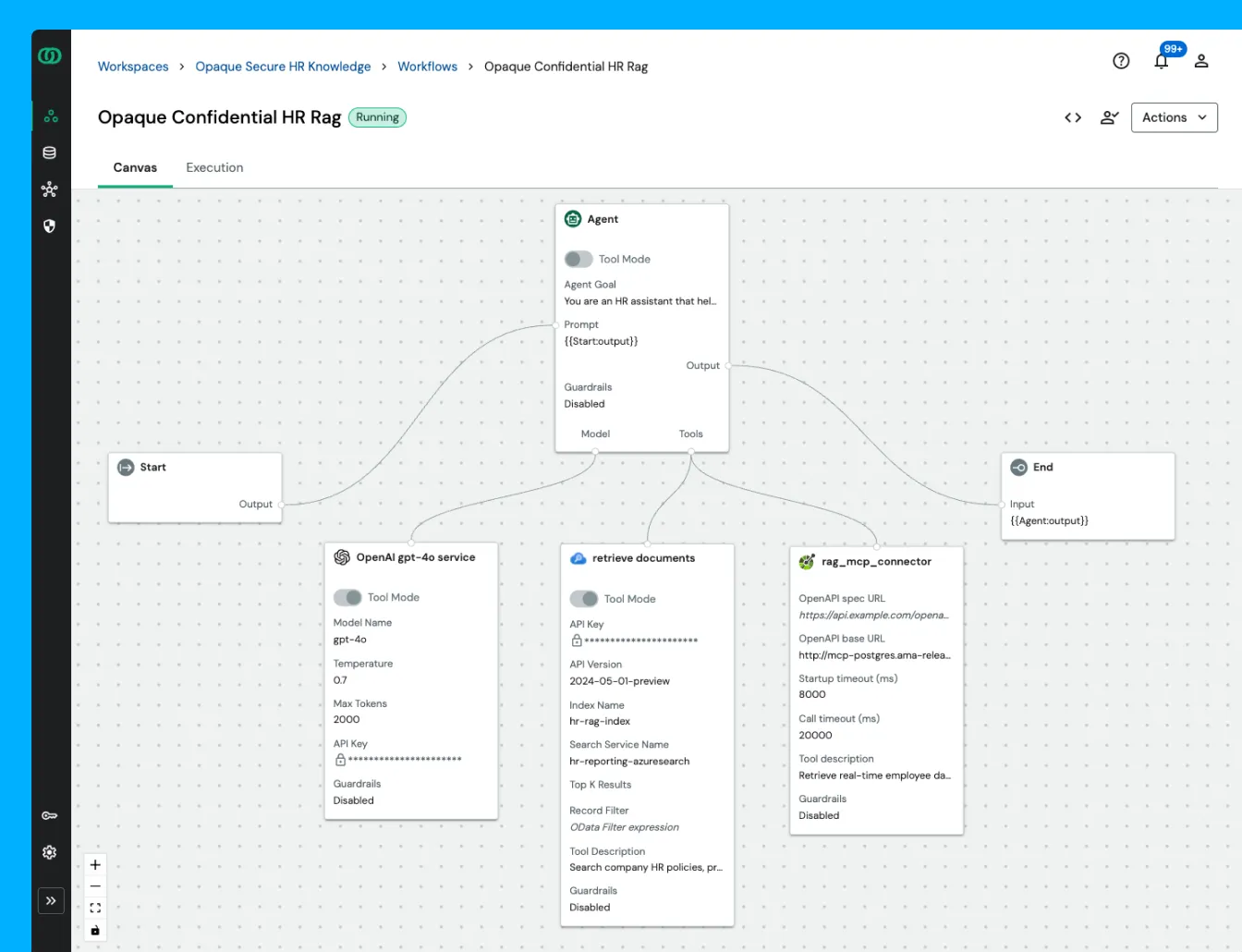

OPAQUE AI Agent Studio

A governed environment where your teams can design, test, and deploy AI workflows over sensitive data:

- Design confidential RAG pipelines and agentic workflows using a visual canvas

- Bring your own workloads as containers from other AI frameworks

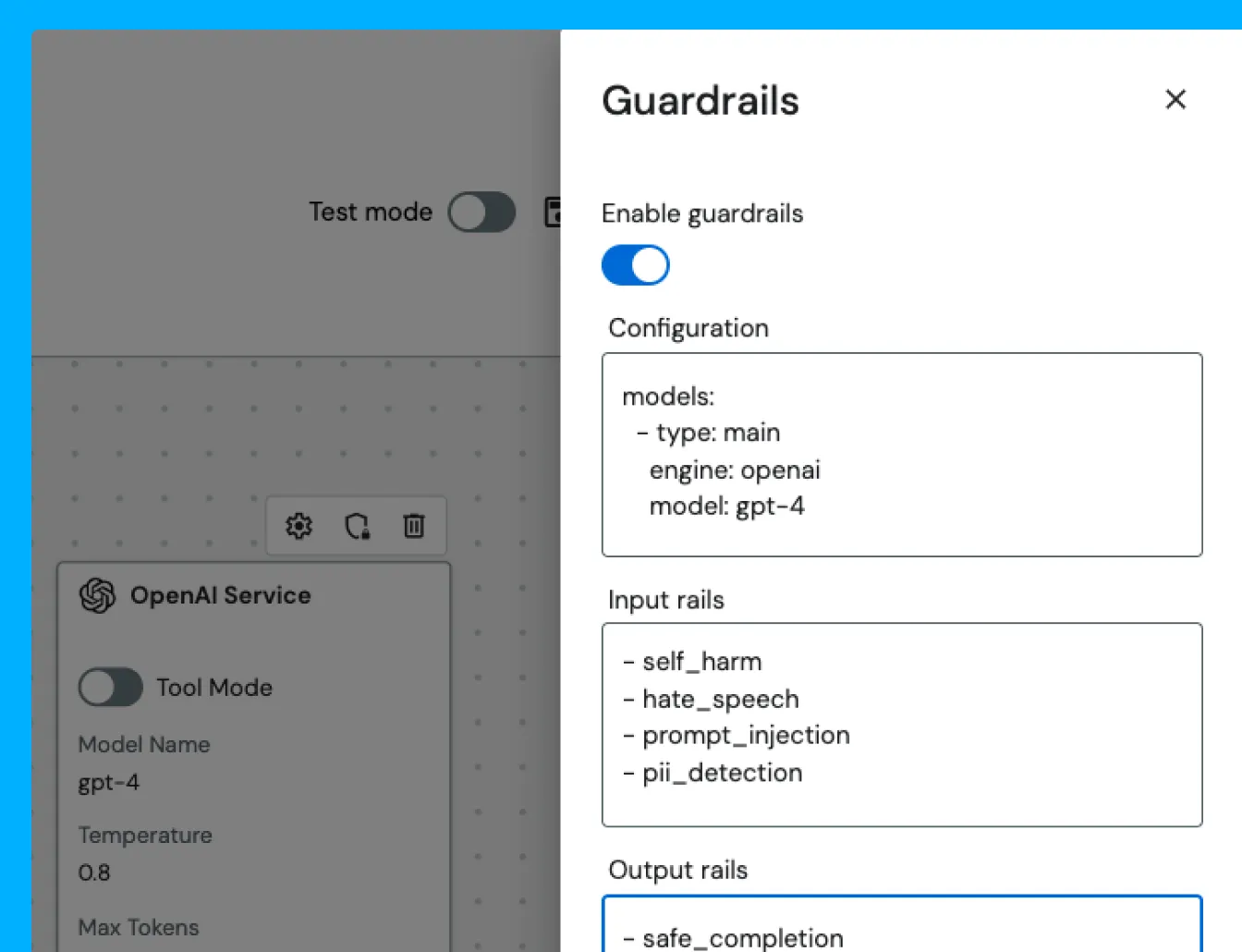

- Attach policies and guardrails to each workflow

- View execution results, policy enforcement outcomes, and audit evidence in one place

OPAQUE Confidential Runtime & SDK

A confidential execution layer that your teams and platforms can call directly:

- Runs inside confidential computing infrastructure in your cloud environment

- Keeps data encrypted in memory during processing

- Enforces policies and guardrails during runtime

- Produces hardware-signed attestation and audit artifacts before, during, and after workload execution, exportable for audit or independent verification

HOW IT WORKS

How OPAQUE Works

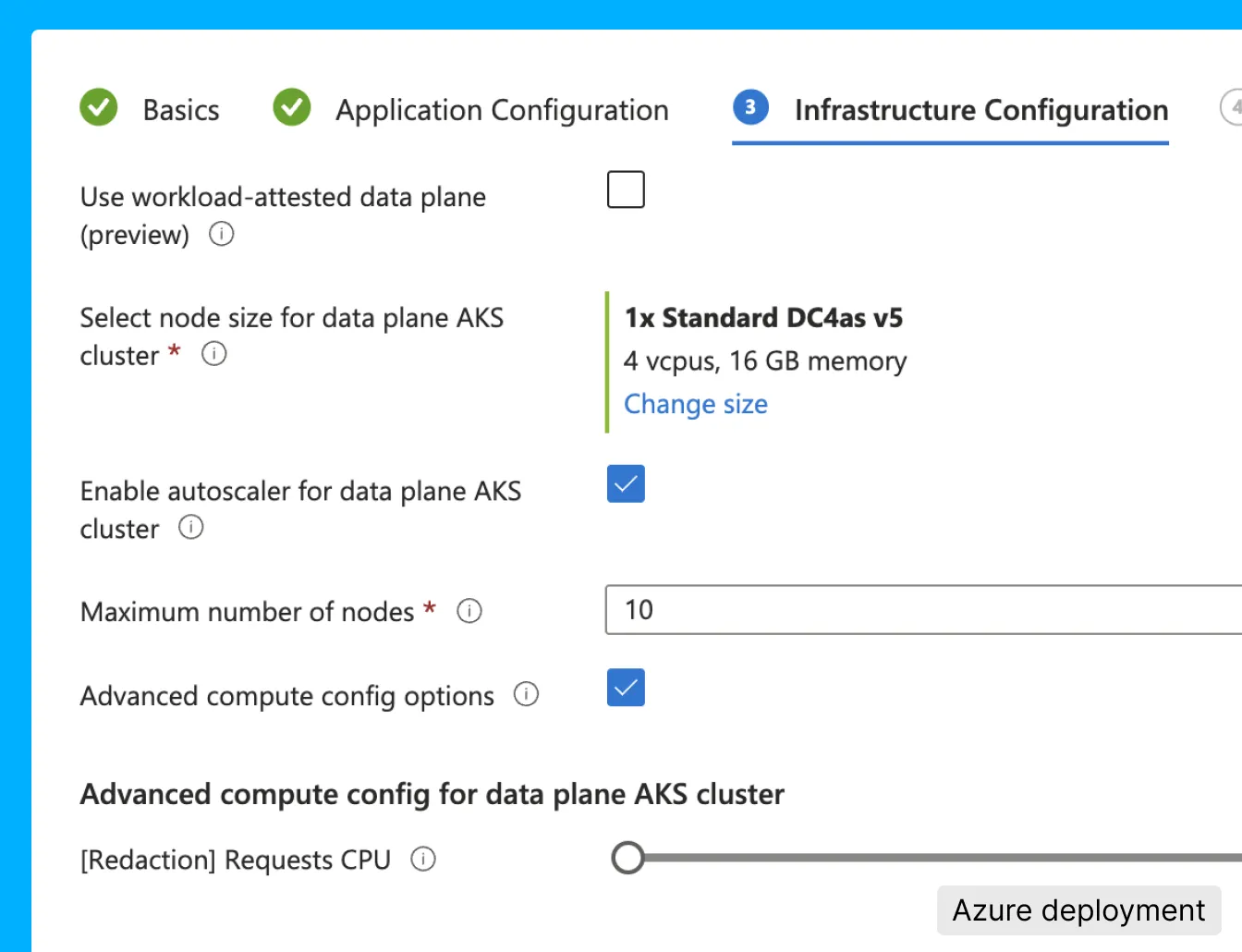

Deploy In Your Cloud

OPAQUE is deployed into your cloud environment within confidential computing–enabled infrastructure:

- Installed into Kubernetes clusters backed by Confidential Virtual Machines (CVMs).

- Supports clusters with confidential GPUs (cGPUs) for accelerated inference and agent execution.

- Requires no data migration or replication outside your environment.

Author or Import AI Workloads

Deploy AI workloads in one of two ways:

- The OPAQUE Studio: Deploy LLMs and design RAG pipelines or agentic workflows on a visual canvas. Configure data access, models, tools, and execution parameters.

- Import containerized workloads: Bring existing AI workloads or custom services as signed OCI container images and deploy them directly onto the OPAQUE Confidential Runtime.

Policy Enforcement

OPAQUE policies define and enforce what workloads are allowed to do at runtime, scoped at multiple levels:

- Data-level policies: Control which data sources can be accessed by specific workflows.

- Workflow-level policies: Constrain AI workflow and agent behavior, including allowed models, tools, endpoints, and AI guardrails.

- Container-level policies: Restrict runtime behavior and apply container manifests.
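The three policy scopes above can be pictured as independent checks that a workload step must all pass. The following is a minimal sketch of that idea, not OPAQUE's policy schema or SDK: the `Policy`, `Request`, and `evaluate` names, the field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy bundle; field names are illustrative only."""
    allowed_data_sources: frozenset = frozenset()   # data-level scope
    allowed_models: frozenset = frozenset()         # workflow-level scope
    allowed_tools: frozenset = frozenset()          # workflow-level scope
    allowed_image_digests: frozenset = frozenset()  # container-level scope


@dataclass(frozen=True)
class Request:
    """What a workload asks to do at one step of a workflow (hypothetical)."""
    data_source: str
    model: str
    tool: str
    image_digest: str


def evaluate(policy: Policy, request: Request) -> list:
    """Return the violated scopes; an empty list means the step is allowed."""
    violations = []
    if request.data_source not in policy.allowed_data_sources:
        violations.append("data")
    if (request.model not in policy.allowed_models
            or request.tool not in policy.allowed_tools):
        violations.append("workflow")
    if request.image_digest not in policy.allowed_image_digests:
        violations.append("container")
    return violations


# Example values are placeholders, not real data sources, models, or digests.
EXAMPLE_POLICY = Policy(
    allowed_data_sources=frozenset({"claims_db"}),
    allowed_models=frozenset({"model-a"}),
    allowed_tools=frozenset({"search"}),
    allowed_image_digests=frozenset({"sha256:abc"}),
)
```

In this sketch a step is denied by default: anything not explicitly listed in a scope counts as a violation, and the returned list names every scope that failed so the outcome can be recorded.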

Execute with Enforced Guarantees

Deployed workloads are executed only if attestation succeeds:

- Workloads are deployed as containers inside a confidential Kubernetes environment, keeping data encrypted in memory while it is processed.

- Secure key release ensures data sources can be accessed only by verified workloads running in trusted environments.

- Communication between platform components is performed over attested TLS (aTLS) connections, which bind encrypted communication to verified hardware, runtime, and workload identity.

All defined policies (data policies, AI workflow policies, and container policies) are enforced inline during execution.
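The secure key release step can be illustrated with a small sketch: a data key is handed over only when the measurement a workload reports matches the one that was approved. This is a simplified illustration under stated assumptions, not OPAQUE's implementation. The `measure` function and `KeyBroker` class are hypothetical, and a real deployment would verify a hardware-signed attestation report against the hardware vendor's certificate chain rather than compare a bare hash.

```python
import hashlib
import hmac


def measure(image_bytes: bytes, config_bytes: bytes) -> str:
    """Combine workload image and configuration into one measurement (illustrative)."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(image_bytes).digest())
    h.update(hashlib.sha256(config_bytes).digest())
    return h.hexdigest()


class KeyBroker:
    """Hypothetical key broker that releases a data key only to an approved workload."""

    def __init__(self, expected_measurement: str, data_key: bytes):
        self._expected = expected_measurement
        self._data_key = data_key

    def release_key(self, reported_measurement: str) -> bytes:
        # Constant-time comparison avoids leaking how many characters matched.
        if not hmac.compare_digest(self._expected.encode(),
                                   reported_measurement.encode()):
            raise PermissionError("attestation failed: measurement mismatch")
        return self._data_key
```

A workload whose image or configuration differs by even one byte produces a different measurement, so `release_key` raises `PermissionError` and the data source stays inaccessible.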

Export Verifiable Evidence and Audit Reports

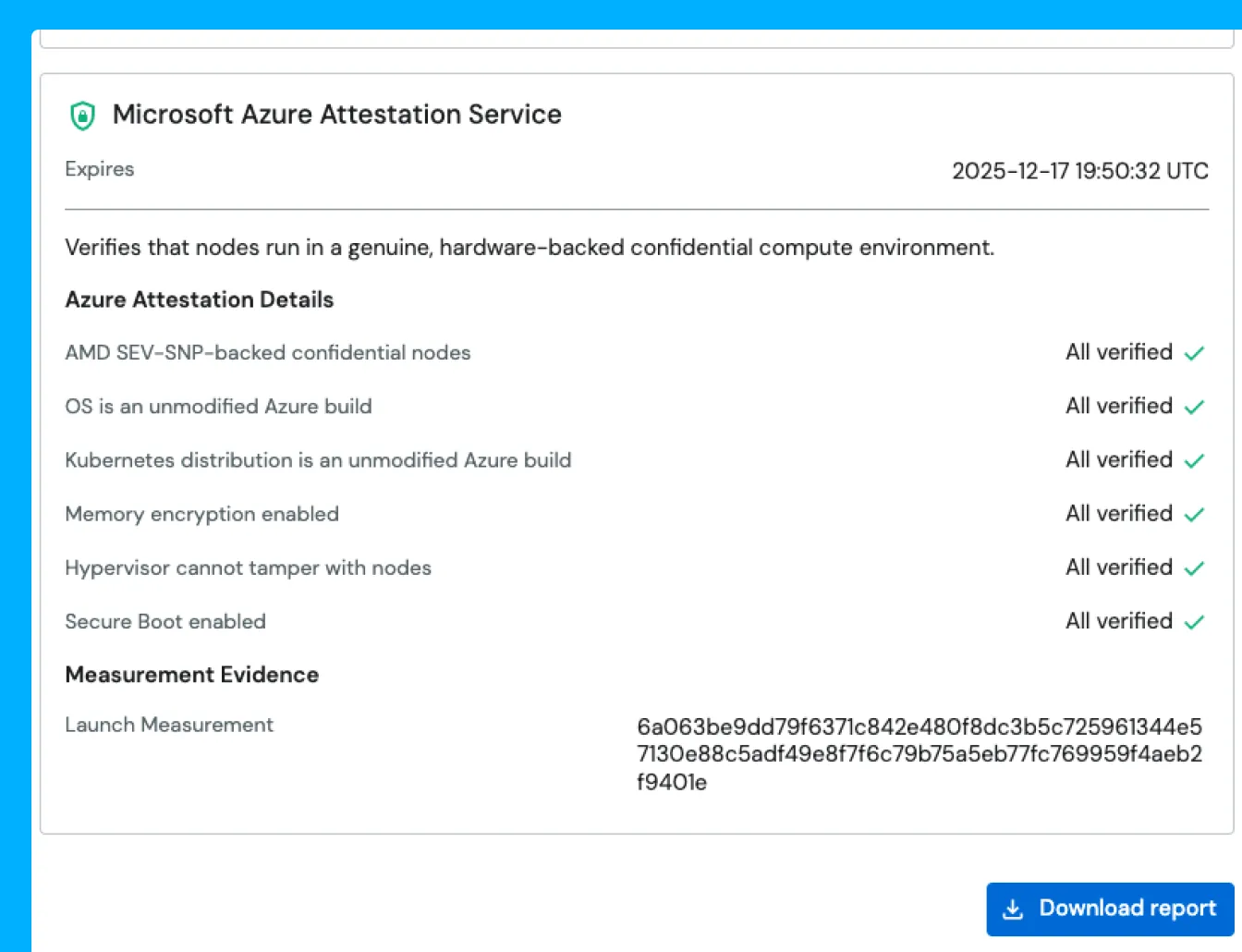

OPAQUE produces hardware-signed attestation reports and immutable audit records, exportable via APIs or OpenTelemetry-compatible endpoints and independently verifiable using the SDK or open-source tools.

- Attestation reports provide verifiable measurements of the integrity of the computing stack: confidential computing hardware (CPUs and GPUs); infrastructure (confidential VMs and Kubernetes environment); and all OPAQUE platform binaries, container images, and configurations.

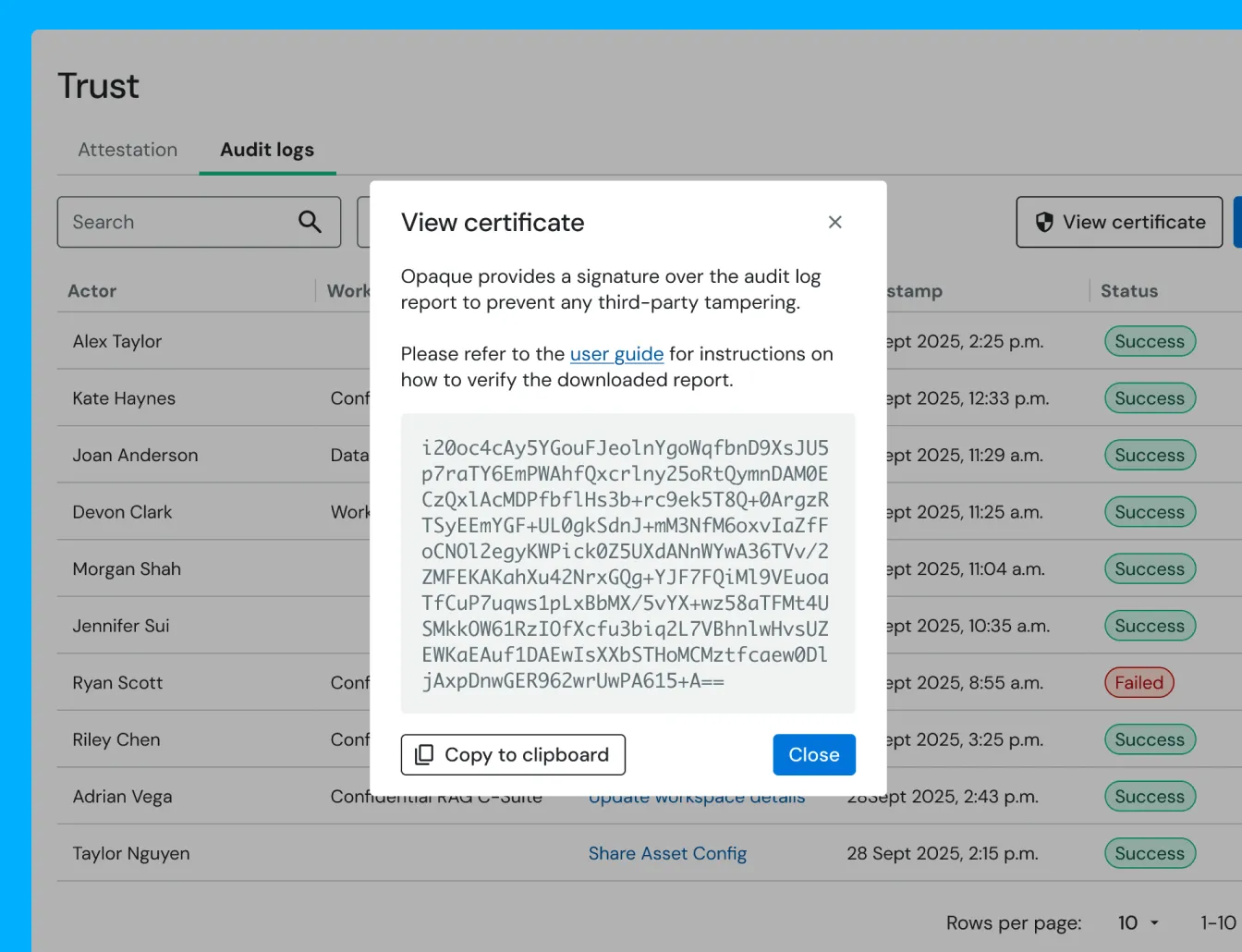

- Audit trails capture execution-level metadata: a trace of execution events; workload identity and configuration; and execution timestamps, status, and outcomes. Audit reports are cryptographically signed by the attested platform and are tamper-evident.
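One common way to make an audit trail tamper-evident is to chain each record to the hash of the previous one and sign the final hash. The sketch below shows that general technique under stated assumptions; it is not OPAQUE's audit format or SDK. All function names are hypothetical, and an HMAC with a shared key stands in for the platform's signature, where a real system would more likely use an asymmetric signature tied to the attested environment.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, event: dict) -> str:
    """Hash an event together with the hash of the record before it."""
    payload = json.dumps(event, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


def append_event(log: list, event: dict) -> None:
    """Append an event, linking it to the current head of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _digest(prev_hash, event)})


def sign_log(log: list, key: bytes) -> str:
    """Sign the head of the chain (HMAC used here as a stand-in signature)."""
    head = log[-1]["hash"] if log else GENESIS
    return hmac.new(key, head.encode(), hashlib.sha256).hexdigest()


def verify_log(log: list, signature: str, key: bytes) -> bool:
    """Recompute every link, then check the signature over the final hash."""
    prev_hash = GENESIS
    for record in log:
        if (record["prev"] != prev_hash
                or record["hash"] != _digest(prev_hash, record["event"])):
            return False
        prev_hash = record["hash"]
    expected = hmac.new(key, prev_hash.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected.encode(), signature.encode())
```

Because each hash covers the previous one, editing, reordering, or deleting any record changes the recomputed chain, and `verify_log` returns `False`.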
