The Next Evolution of AI Governance Closes the Runtime Gap

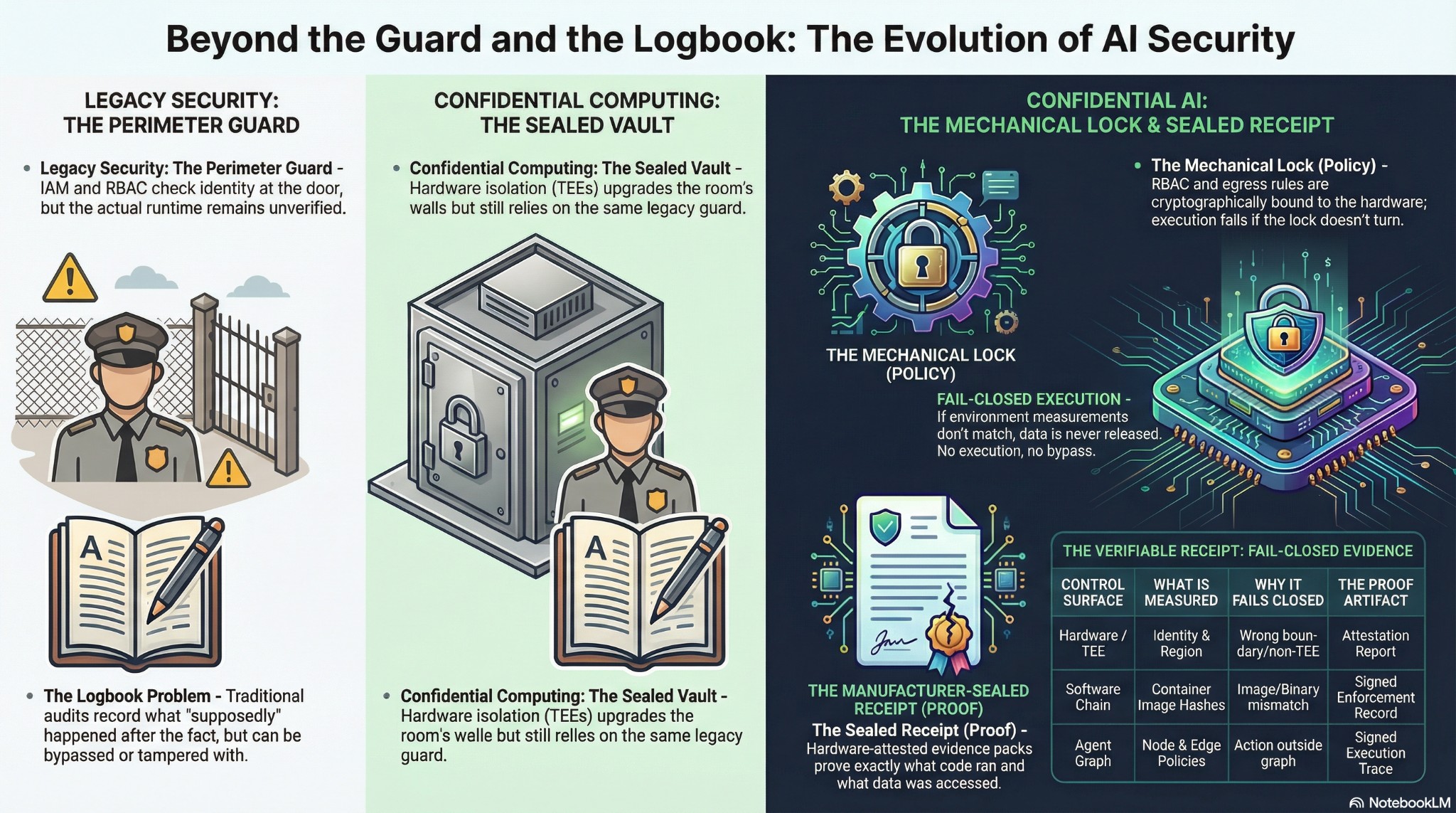

The way most enterprises govern AI is a guard and a logbook.

IAM checks identity at the door. RBAC decides who can access what. Data residency policies specify where data should stay. Privacy frameworks define what should happen. Guardrails tell the AI what it shouldn’t do.

Then a logbook—written by the same system you’re trying to audit—records what supposedly happened after.

Between policy configuration and audit review, nothing is verified at runtime. The guard isn’t in the room during execution. That’s the gap where governance fails.

When agents operate at machine speed, chaining tool calls, retrieving data, and crossing jurisdictional boundaries autonomously, every action is an authenticated, authorized request. Your data residency policy says patient records stay in-region. Your agent just made six API calls across three services in 400 milliseconds. Did the data stay where your policy says it should?

You’ll find out in the next audit. Maybe.

AI governance, privacy, and data sovereignty commitments are largely unenforceable today. They’re procedural. They depend on software behaving as configured, operators not making mistakes, and logs faithfully capturing what happened. At human speed, manageable. At agent speed, a liability.

Confidential computing improved the room. Hardware-backed Trusted Execution Environments seal the environment so no cloud provider, operator, or neighboring workload can access your data. Real sovereignty improvement. But inside that sealed environment, governance is still the guard and the logbook. Policies configured before. Logs reviewed after.

You know where the data is. You can’t prove what happened to it.

Confidential AI changes the mechanism.

Data residency, purpose limitation, consent enforcement, cross-border transfer restrictions—all cryptographically bound to the runtime. The policy becomes the lock itself. Each tumbler clicks open only if the right conditions are met—before and during execution. Wrong sequence, the lock doesn’t turn. The operation doesn’t complete. Not flagged for review. Not logged for investigation. Blocked by the architecture.
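To make the "tumbler" idea concrete, here is a minimal sketch of fail-closed policy gating in plain Python. It is an illustration only: the `Context` fields, the policy names, and `run_gated` are hypothetical, and ordinary software like this does not provide the cryptographic, hardware-backed binding described above. It only shows the semantics: every policy is re-checked before each step, and a failed check stops the operation instead of flagging it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Context:
    """Hypothetical runtime facts a policy can inspect for one step."""
    region: str
    purpose: str
    consent: bool


# A policy ("tumbler") is a predicate over the step's context.
Policy = Callable[[Context], bool]


class PolicyViolation(Exception):
    """Raised when a tumbler does not click; the step never runs."""


# Illustrative policies; the values are assumptions for this sketch.
POLICIES: Dict[str, Policy] = {
    "residency": lambda c: c.region == "eu-west",
    "purpose": lambda c: c.purpose == "treatment",
    "consent": lambda c: c.consent,
}


def check(policies: Dict[str, Policy], ctx: Context) -> None:
    # Fail closed: the first unmet condition aborts the operation.
    for name, satisfied in policies.items():
        if not satisfied(ctx):
            raise PolicyViolation(name)


def run_gated(
    policies: Dict[str, Policy],
    steps: Iterable[Tuple[Context, Callable[[], object]]],
) -> List[object]:
    results = []
    for ctx, action in steps:
        check(policies, ctx)       # re-evaluated before every step
        results.append(action())   # runs only if every tumbler clicked
    return results
```

If the second step of a chain presents a context with `region="us-east"`, `run_gated` raises `PolicyViolation("residency")` and no later step executes. The failure is a blocked operation, not a log entry to review later.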

Every operation produces a manufacturer-sealed receipt. A hardware-attested evidence pack proving what code ran, what policies governed execution, what data was accessed. Not the application’s self-reported logs. A record signed by the hardware manufacturer that any regulator, sovereign authority, or data protection officer can independently verify.
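What "independently verify" means can be sketched in a few lines. The example below is a simplified stand-in, not a real attestation format: the receipt fields (`code_hash`, `policy_hash`, `data_accessed`) are hypothetical, and an HMAC with a shared key substitutes for the manufacturer's asymmetric signature so the example runs on the Python standard library alone. In a real scheme the verifier would hold only a public key chained to the manufacturer's root certificate, never a shared secret.

```python
import hashlib
import hmac
import json


def canonical(receipt: dict) -> bytes:
    # Stable byte encoding so signer and verifier hash the same payload.
    return json.dumps(receipt, sort_keys=True, separators=(",", ":")).encode()


def seal(receipt: dict, key: bytes) -> str:
    # Stand-in for the hardware signature (assumption: HMAC-SHA256).
    return hmac.new(key, canonical(receipt), hashlib.sha256).hexdigest()


def verify(receipt: dict, seal_hex: str, key: bytes) -> bool:
    # Constant-time comparison; any changed field invalidates the seal.
    return hmac.compare_digest(seal(receipt, key), seal_hex)


# Hypothetical receipt for a single operation.
receipt = {
    "code_hash": hashlib.sha256(b"model-server-v1").hexdigest(),
    "policy_hash": hashlib.sha256(b"residency=eu-west").hexdigest(),
    "data_accessed": ["patient/123"],
}
```

Sealing `receipt` and then altering `data_accessed` makes `verify` return `False`. That is the property that separates a sealed receipt from a self-reported log: the record cannot be edited after the fact without detection.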

Three eras of AI governance: the guard who checks the policy, the vault that isolates the data, the lock whose tumblers are the policies—clicked open or not—before and during runtime, with a sealed receipt to prove it.

Contact us for a threat matrix showing you where you’re leaking data in your AI workloads.