OPAQUE Confidential Agents for Retrieval-Augmented Generation (RAG)

Unlock the power of AI without compromising privacy, built on OPAQUE’s industry-leading confidential computing architecture.

If AI is central to your future, don’t build on borrowed trust. OPAQUE provides a platform where your Confidential Agents reason, act, and adapt—securely.

With hardware-enforced confidentiality, enterprise-grade governance, and encrypted data handling, OPAQUE ensures your agentic workflows are secure, compliant, and built for scale.

Why OPAQUE Confidential Agents for RAG Are Different

Most agentic frameworks stop at orchestration.

OPAQUE redefines the standard.

- Confidential: Every agent action runs inside hardware-backed Trusted Execution Environments (TEEs), ensuring data remains encrypted—even in use.

- Verifiable: Gain cryptographic guarantees and tamper-proof audit logs. Don’t just assume security—prove it.

- Scalable: From healthcare and finance to proprietary models, OPAQUE scales across highly regulated, high-risk environments.

With OPAQUE, every decision an agent makes is secured, governed, and traceable.

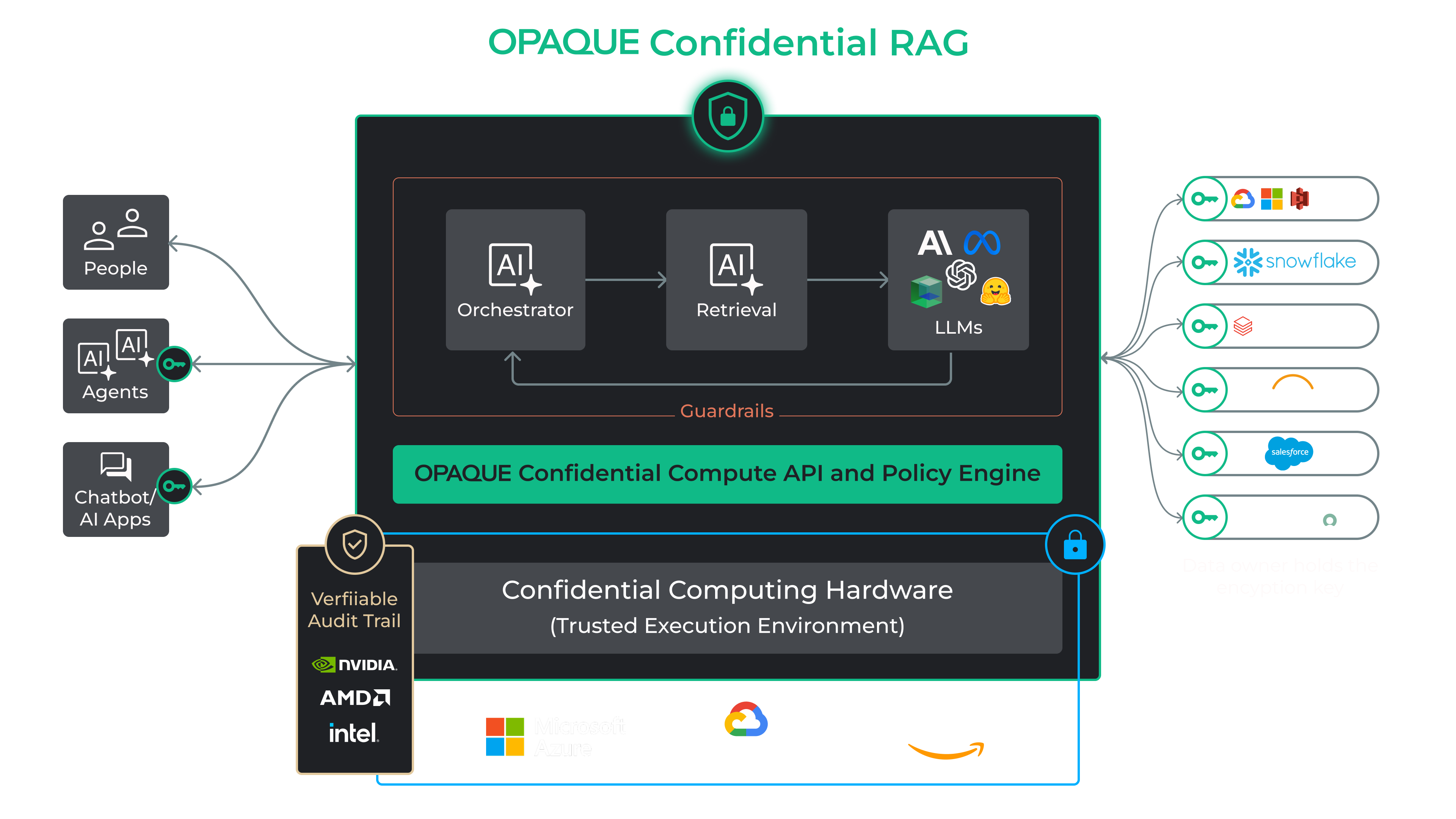

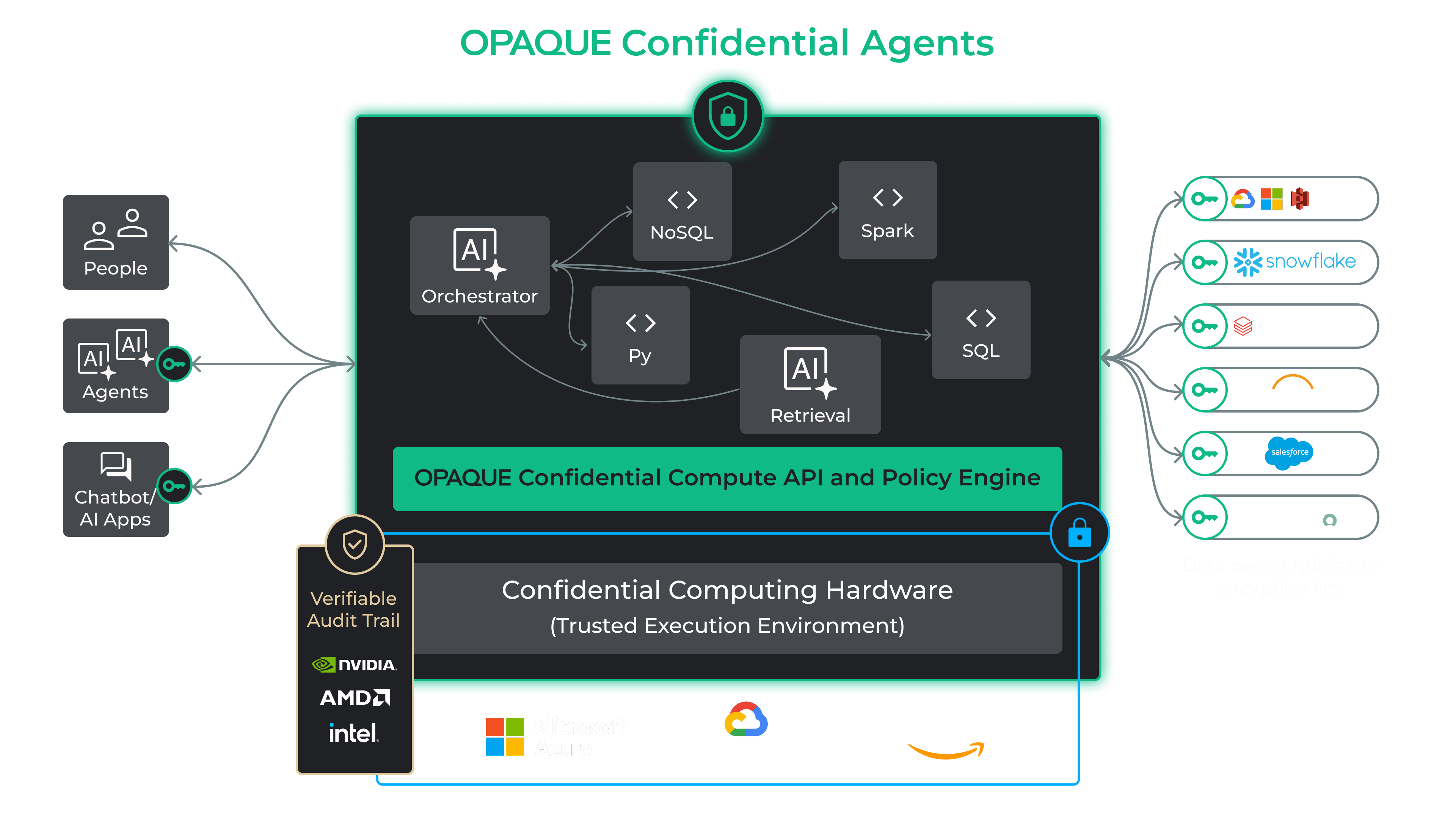

How OPAQUE Confidential Agents Work

Building secure, intelligent workflows should not require stitching together tools or sacrificing control. With OPAQUE, it doesn’t.

- Design Your Agentic Workflow

Start with our intuitive no-code builder to define workflows programmatically. Configure agents to retrieve data, reason over it, make decisions, and act, all within a governed, visual environment.

- Bring Your Own LLMs and Data

Plug in the language models of your choice, whether open-source or proprietary, and connect to structured and unstructured data sources across your cloud or on-prem environments. OPAQUE enables agents to retrieve and act on the data you trust, while keeping it encrypted and governed throughout.

- Define Agent Roles and Collaboration

Assign specialized agents to handle tasks.

- Enforce Policies and Guardrails from the Start

Built-in policy controls ensure sensitive data is handled appropriately from the moment it enters the workflow.

- Run Securely in Confidential Environments

Every agent executes inside hardware-backed Trusted Execution Environments (TEEs), so your data stays private throughout processing, not just in storage or transit.

- Monitor, Audit, and Iterate

Gain full visibility into agent behavior with audit logs and real-time observability. Track how decisions were made, verify outcomes, and adjust your workflow with confidence as your needs evolve.

Key Features of Confidential Agents for RAG

Confidential Agents for RAG go beyond secure retrieval: they drive real-time, trustworthy AI decision-making with enterprise-grade controls.

- Confidential RAG: Keep sensitive data encrypted throughout its lifecycle—from retrieval to inference. Enable selective PII redaction when external LLMs are involved to ensure privacy and compliance.

- Dynamic Compliance Enforcement: Guardrails and RBAC are enforced continuously across agent actions—not just configured statically.

- Real-Time Observability: Track prompts, decisions, and outputs through detailed audit logs—supporting compliance and root-cause analysis.

- Multi-Agent Collaboration: Enable agents to escalate, delegate, and adapt across workflows—autonomously and securely.

- Governed AI at Scale: Start with static pipelines, grow into adaptive systems with explainable decision paths and self-verifying compliance.

OPAQUE empowers enterprises to operationalize AI with the trust, speed, and control required to run mission-critical systems—now and into the future.

The Complete Picture: Traditional vs. Confidential Agents for RAG with OPAQUE

Frequently Asked Questions

Confidential RAG is a next-generation approach to Retrieval-Augmented Generation designed for sensitive data. Unlike standard RAG, which retrieves and processes data in plaintext (putting it at risk by exposing it outside the boundaries of the source systems), Confidential RAG maintains data protection throughout the workflow, providing verifiable guarantees of privacy, policy enforcement and auditability. All operations are executed within attested environments, with cryptographic controls that ensure data remains secure from infrastructure providers, admins, and unauthorized agents.

Guardrails are runtime policies that ensure LLM applications behave safely, ethically, and in compliance with enterprise or regulatory standards.

In the OPAQUE platform, guardrails are verifiably enforced inside trusted execution environments (TEEs) and applied step-by-step in agentic workflows—ensuring every agent action is governed, logged, and verifiable. OPAQUE supports the NVIDIA NeMo Guardrails framework as a baseline, enabling users to define rules using a flexible policy language and enforce them across inputs, outputs, and tool use in real time.

Yes. The guardrail system supports both declarative policy rules and user-defined logic.

Policies are written using Colang, a human-readable, declarative policy language developed by NVIDIA and utilized in their NeMo Guardrails framework. Colang simplifies the definition of safe, structured AI behaviors—such as blocking sensitive topics, restricting tool use, or requiring fallback responses—without the need for low-level programming.

OPAQUE prevents prompt injection, data leakage, and misuse through a layered security model—combining confidential computing, policy enforcement, and verifiable auditing.

All RAG queries are executed inside trusted execution environments (TEEs), where sensitive data stays encrypted and inaccessible to the model, cloud provider, or infrastructure admins. Within this environment, user prompts are parsed, filtered, and evaluated against guardrails—declarative and user-defined policies that block unsafe inputs, restrict tool access, and enforce compliance. The TEE ensures that all policies (on data as well as agent behavior) are verifiably enforced. Further, all agent actions are recorded in an immutable, tamper-proof audit log, enabling verification and accountability. This architecture ensures that every agentic workflow operates safely, predictably, and provably—without exposing sensitive data or allowing unauthorized behavior.

We currently support a variety of open-source LLMs, including Llama, Mistral, and Gemma models, with more to come soon. Customers can easily switch between third-party and confidential open-source LLMs, reducing vendor lock-in.

OPAQUE can serve LLMs directly inside its TEEs, ensuring sensitive data stays protected with verifiable privacy, runtime policy enforcement, and tamper-proof audit logs.

Even when using an external LLM, much of the sensitive data processing—such as ingestion, embedding generation, and retrieval—can still run within OPAQUE's TEE, preserving confidentiality and significantly reducing exposure.

When data must leave for external inference, it's encrypted in transit. To mitigate risk, OPAQUE includes built-in Redaction and Tokenization services that can sanitize sensitive fields before any external call.
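The redact-and-tokenize step described above can be illustrated with a minimal Python sketch. This is not OPAQUE's Redaction or Tokenization service: the patterns, the `tokenize` and `detokenize` helpers, and the token format are all hypothetical, chosen only to show the idea of swapping sensitive fields for opaque tokens before an external call and restoring them afterwards.

```python
import re
import secrets

# Illustrative patterns only (assumption); real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def tokenize(text, vault):
    """Replace each PII match with a random token and record the mapping in `vault`."""
    for label, pattern in PII_PATTERNS.items():
        def _swap(match, label=label):
            token = f"<{label}_{secrets.token_hex(4)}>"
            vault[token] = match.group(0)  # the mapping never leaves the trusted side
            return token
        text = pattern.sub(_swap, text)
    return text


def detokenize(text, vault):
    """Restore original values in a response that echoes the tokens."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text
```

In this sketch only the tokenized prompt would be sent to the external LLM; the `vault` dictionary stays inside the protected environment and is used to restore values in the response.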

Yes. We support deployment into your private cloud, as long as it runs on Azure or GCP.

OPAQUE integrates natively into your existing AI stack—no rewrites, no re-platforming. Confidential agentic workflows, including data ingestion, retrieval, generation, and response, are exposed as standard REST APIs, so you can route requests directly without changing your codebase.

All processing occurs inside attested Trusted Execution Environments (TEEs) running on cloud infrastructure, such as Azure and GCP, ensuring that data remains protected—even during computation—and is inaccessible to cloud providers, administrators, or other workloads.

OPAQUE enforces data privacy through a hardware-attested confidential runtime that verifies the integrity of the runtime, agents, and data connections before any data access or processing begins. All processing is run within a TEE.
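The "verify before any data access" ordering described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not OPAQUE's attestation protocol: real TEE attestation relies on hardware-signed evidence checked against the hardware vendor's certificates, whereas here `measure`, `attested`, and `release_data` are hypothetical helpers that only compare SHA-256 measurements against an expected allowlist.

```python
import hashlib
import hmac


def measure(artifact: bytes) -> str:
    """Stand-in for a hardware measurement: a SHA-256 digest of the component."""
    return hashlib.sha256(artifact).hexdigest()


def attested(reported: dict, expected: dict) -> bool:
    """True only if every expected component reports a matching measurement."""
    return all(
        name in reported and hmac.compare_digest(reported[name], digest)
        for name, digest in expected.items()
    )


def release_data(reported: dict, expected: dict, fetch):
    """Call `fetch` (the data access) only after the integrity check passes."""
    if not attested(reported, expected):
        raise PermissionError("attestation failed; data access denied")
    return fetch()
```

The design point is the gate itself: the data connection is never opened unless the runtime and agents match what was approved in advance.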

Access controls and governance policies—such as role-based restrictions—are defined at the data connection, runtime, and agentic workflow level and cryptographically bound into the runtime. This ensures policies are not just configured—they are enforced during execution, with tamper-proof, verifiable guarantees.

Guardrail policies can be specified on an agent-by-agent basis. As you design a workflow and specify the interaction between two agents in it, for example agent A handing off to agent B, a guardrail policy can be applied to both the output of A and the input of B. We don’t support policy enforcement across agents running in different workflows, beyond the guardrail policies that can be applied to the agents executing inside OPAQUE’s platform.
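The placement of guardrails on the output of one agent and the input of the next can be sketched as follows. This is a plain-Python illustration, not Colang and not OPAQUE's API: the `enforce` and `hand_off` helpers, the two example rails, and the agents-as-functions model are all assumptions made for the example.

```python
import re
from typing import Callable, List

Guardrail = Callable[[str], bool]  # returns True when the message is allowed


class GuardrailViolation(Exception):
    """Raised when a message fails a guardrail check."""


def no_long_digit_runs(message: str) -> bool:
    # Hypothetical rule: block anything that looks like an account number.
    return re.search(r"\d{10,}", message) is None


def under_500_chars(message: str) -> bool:
    # Hypothetical rule: cap the size of what the next agent receives.
    return len(message) <= 500


def enforce(message: str, rails: List[Guardrail], stage: str) -> str:
    """Return the message unchanged, or raise at the first failing rail."""
    for rail in rails:
        if not rail(message):
            raise GuardrailViolation(f"{stage}: blocked by {rail.__name__}")
    return message


def hand_off(agent_a, agent_b, task, output_rails, input_rails):
    """Run A, check its output, check it again as B's input, then run B."""
    draft = enforce(agent_a(task), output_rails, "output of A")
    return agent_b(enforce(draft, input_rails, "input of B"))
```

Keeping the two checks separate lets each agent carry its own policy: A's output rails travel with A, and B's input rails travel with B, whichever agents they are paired with in a workflow.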

OPAQUE creates a tamper-proof audit trail showing which workflow ran, who ran it, and which tools the agents accessed. The trail omits sensitive payloads yet remains fully cryptographically provable and verifiable with standard third-party tools.
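One common way to make an audit trail verifiable is hash chaining, sketched below in Python. This is a generic illustration and an assumption on our part, not OPAQUE's log format: the field names (`workflow`, `user`, `tools`) simply mirror the items listed above, and a production system would additionally sign entries with keys held in attested hardware. Note that the record stores metadata only, with no payloads.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def _digest(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record's canonical JSON."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_entry(log: list, workflow: str, user: str, tools: list) -> None:
    """Append a metadata-only record linked to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"workflow": workflow, "user": user, "tools": sorted(tools)}
    log.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})


def verify(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because verification needs only SHA-256 and JSON, a third party can check such a chain with standard tooling and without access to the underlying data.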

Organizations can define regulatory policies—such as data access constraints, usage rules, and data isolation—and bind them cryptographically into the runtime. These policies are enforced at execution, preventing any data exposure that would violate a regulation. Since policy enforcement is tied to cryptographic keys and attested hardware, no unauthorized access is possible—even from infrastructure or cloud providers.