From Proof of Concept to Proof of Value: The 5 Levels of Confidential AI Readiness

AI pilots are everywhere. But real value? It’s elusive. In many organizations, “AI strategy” starts and stops with proof-of-concept slides, not business outcomes. Teams invest heavily, but often finish the quarter with a flashy demo, a handful of “AI-powered” tools in production, and a lingering sense that something’s missing.

According to Teresa Tung, Senior Managing Director at Accenture, who presented at the Confidential Computing Summit 2025, Accenture's deep dive into 2,000+ generative AI projects reveals that only 13% of enterprises actually see ROI from AI investments. The other 87%? Stuck in endless pilots and dead-end deployments, a rut with real consequences for risk, cost, and competitive edge.

How do you break out? It requires more than bigger budgets. You need to be brutally honest about where you are and what it takes to move from excitement to evidence. That’s where the Confidential AI Maturity Framework comes in. Think of it as your roadmap out of “pilot purgatory” and into sustainable, cross-functional, verifiable AI value.

It’s built on lessons from Accenture, OPAQUE, and the Confidential Computing Summit’s most pragmatic leaders, all facing the same question: Are you ready for AI agents that act at machine speed, in production, with trust you can prove? If you want to scale AI with confidence—not just hope—you’ll need to know what stage you’re really at.

This guide breaks down the five stages of enterprise Confidential AI readiness, with diagnostic questions, hard-won lessons, and a clear path to move up the ladder. By the end, you’ll be able to benchmark where you stand—and, more importantly, what it’ll take to join the 13% who are seeing impact.

Why Confidential AI Maturity Matters Now

Let's talk about why Confidential AI maturity matters—urgently.

The numbers Teresa Tung presented at the Confidential Computing Summit tell a clear story: 83% of leaders say they're excited about AI, but many projects stall at the "Progressing" stage. That means lots of energy, not much to show for it, and a whole lot of fragmented efforts that never escape their silo.

AI agents are already everywhere: CrewAI now clocks 60 million agents a month, while Microsoft's got 100,000+ confidential VMs humming away. But these wins have exposed just how quickly old-school controls and ad hoc "AI experiments" reach their limit.

At this scale, it's easy to lose track of who owns the data, what rules are being followed, and whether anyone can prove a result wasn't just wishful thinking. Confidential AI maturity closes that gap: it's the foundation for making AI reliable enough to bet the business on.

If you want AI to move past small wins and endless pilots, you need trusted data, cross-functional guardrails, and controls that run automatically, all the time. What separates the companies scaling real results is simple: they can show how things work, prove compliance on demand, and point to business impact. The teams that move beyond the “trust us” stage are the ones that get to scale with confidence.

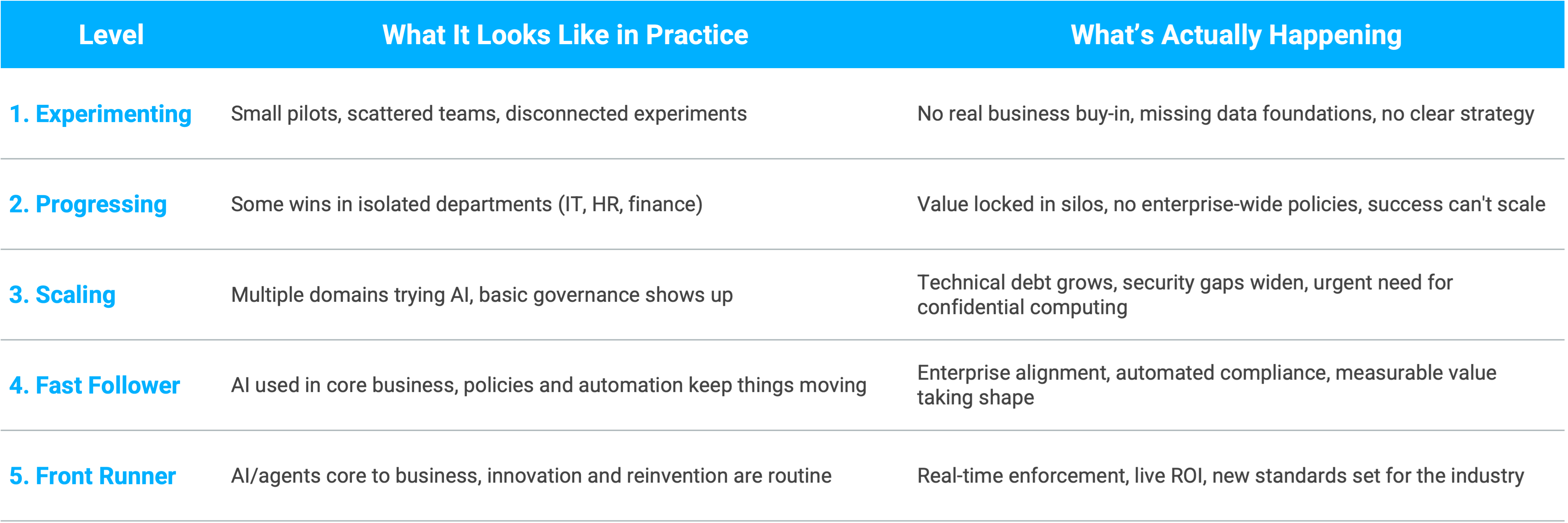

The 5 Levels of Confidential AI Readiness

Clear benchmarks put your current approach in context and make progress measurable. Use this maturity model to identify where your organization stands today and what it takes to advance from experiments to enterprise-scale, trusted AI impact.

Some companies overestimate where they land. If you’re not seeing measurable business impact—or can’t show the receipts when asked—you’re likely below Level 4. Let’s break down each stage.

1. Experimenting (Sandbox Mode)

Most organizations start here: small pilot projects, each run by different teams, rarely sharing knowledge or results. You might see a chatbot in HR, an AI-powered report in finance, or a data science “lab” playing with models on the side—each one disconnected from the rest of the business.

There’s excitement, but little coordination or oversight. Data is messy or incomplete, and the teams involved are often working off their own playbooks. No surprise: what happens in the sandbox stays in the sandbox. These projects look good on slides but rarely deliver outcomes that anyone outside the originating team notices.

To move out of this stage, you need to find pockets of good data, start building bridges between teams, and get leadership interested in more than just the next demo. Progress starts when experiments connect to real business priorities and can be shared, repeated, or scaled beyond their original sandbox.

2. Progressing (Isolated Use Cases)

This is when AI projects move beyond demos and finally hit production, but usually only in support domains like IT, HR, or finance. A department might automate invoice approvals or launch a customer chatbot; success is real, but these wins stay boxed in—isolated from the organization’s core business.

At this stage, it’s common to see data and value stuck in silos. Governance is light or inconsistent, and each use case operates with its own playbook. There’s still no enterprise-wide policy, so every shiny new AI tool has to find its own path.

To advance, organizations need to connect these isolated wins—building scalable governance, encouraging teams to share what works, and moving beyond one-off projects. Real progress starts when policy, data, and best practices spread throughout the business, setting up AI for broader, more reliable impact.

3. Scaling (Multiple Domains)

Here, you start to see AI projects popping up across several departments—maybe supply chain is piloting forecasting models, marketing is running campaign optimizations, and operations is dabbling in workflow automation. There’s some early governance now, with guidelines just starting to appear.

But spreading fast comes with side effects: technical debt builds up, and each team’s “quick win” exposes fresh security, compliance, or privacy risks. The need for confidential computing grows more obvious as sensitive data and complex workloads cross boundaries. Companies in this stage feel the limits of ad hoc oversight—small issues get bigger, and the patchwork approach doesn’t scale for long.

To move forward, you’ll need to invest in clear value frameworks, tighten up governance, and make sure controls aren’t just written down but enforced automatically. The teams that reach for robust, machine-driven policies (instead of heroic manual effort) are the first to make real, measurable ROI possible.

4. Fast Follower (Strategic Bets)

This is where AI becomes integral to core business lines—not just the side projects or back office tasks. Teams adopt automated policy enforcement, confidential infrastructure, and enterprise-wide standards. Now, new AI initiatives plug into central pipelines for procurement, CI/CD (automation pipelines that turn code into deployable products), and compliance, and results can be measured and reported with far less manual overhead.

Strategic cases drive expansion, not just curiosity or optimism. The organization is aligned—the business, security, and IT are working from the same playbook, and AI starts moving at the pace of real business needs.

Here’s how to stay in front: shorten the gap between experiment and ROI, and keep your people learning as fast as the tech evolves. This isn’t the time to coast. Fast followers keep finding new efficiencies, updating automation as standards tighten, and making real-time adjustments as business priorities shift.

5. Front Runner (Continuous Reinvention)

This is what it looks like when the training wheels come off. AI isn’t just powering a handful of projects—it’s at the core, driving how the business works, shifts, and grows. Agents and automation are everywhere: checking policy as they run, handling decisions on the fly, and pushing every part of the business to move faster.

Teams here will question every routine, ditch legacy shortcuts, and keep pressure on themselves to deliver proof instead of promises. ROI isn’t buried in spreadsheets—it’s out in the open, as clear as the new standards others are trying to catch up to. This is where the industry comes to peek under the hood.

Teams that lead at this level never sit still. Once something works, it’s on the chopping block for reinvention. If there’s a sharper play or a better way to share results, they’ll find it—and back it up with proof. Comfortable is not an option here.

Four Enablers and Lessons from the Summit

The Confidential Computing Summit 2025 made it clear what actually moves organizations up the maturity curve. These are the patterns and practices that kept showing up in the teams getting real results:

1. Multidisciplinary leadership shows up in the trenches

Transformation might start with the C-suite, but it lives or dies with cross-functional teams. Think of engineering, compliance, ops, and business leads working together on a live rollout—not just swapping emails. One Summit example: a global bank brought legal and IT together from day one for every AI deployment, cutting bottlenecks later.

2. Confidential AI becomes essential infrastructure

The Summit put it plainly: if your AI touches anything sensitive, "good enough" security doesn’t cut it. The teams that are getting real results are locking down every part of the AI chain—models, data, training runs, inference—not just the easy parts like storage or transit. Confidential AI keeps your IP and customer data out of someone else’s hands, even when the model is crunching it live.

The leading teams showed how to let models work on sensitive data—customer records, contracts, source code—without anyone being able to peek under the hood, whether they’re inside your stack or outside. The leaders at the Summit were frank: the companies seeing ROI are the ones that treat confidential AI like plumbing, not a feature—always on, often invisible, and absolutely non-negotiable when it matters.

3. Automated governance is built-in, not an afterthought

Instead of quarterly policy reviews, leaders bake controls into CI/CD pipelines and procurement so that anything not verifiable or attested never ships. At one panel, a SaaS company showed how they block unverified AI components automatically—no policy loopholes, no manual overrides.
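To make the idea of a pipeline gate concrete, here is a minimal sketch of a check that a CI/CD step might run before release. It is illustrative only and is not the SaaS company's actual system: the `Component` fields, the digest allowlist, and the `gate` function are all hypothetical names, and it assumes attestation reports have already been verified upstream and reduced to a boolean.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One AI component in a release (hypothetical schema)."""
    name: str
    digest: str     # content hash of the artifact
    attested: bool  # True if an attestation report was verified upstream


def gate(components, allowed_digests):
    """Return (name, reason) pairs for every component that must block the release."""
    blocked = []
    for c in components:
        if not c.attested:
            blocked.append((c.name, "missing attestation"))
        elif c.digest not in allowed_digests:
            blocked.append((c.name, "digest not on allowlist"))
    return blocked


# Example release manifest (made-up names and digests).
components = [
    Component("summarizer-model", "sha256:aaa", True),
    Component("vector-plugin", "sha256:bbb", False),
    Component("ocr-service", "sha256:ccc", True),
]
allowed = {"sha256:aaa", "sha256:bbb"}

blocked = gate(components, allowed)
for name, reason in blocked:
    print(f"BLOCKED {name}: {reason}")
```

In a real pipeline, the step would exit with a non-zero status whenever `blocked` is non-empty, so the release stops without relying on a manual review.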

4. Continuous upskilling keeps everyone moving

The strongest teams invest in training across engineering, business, and compliance, even pulling in frontline contributors. For example, one enterprise created “AI fluency sprints” for every department, so nobody had to guess what good governance or secure agent infrastructure looked like on the job.

These enablers were highlighted again and again as the moves that unlocked real progress. The biggest takeaway? The companies that combine technical discipline with habits of transparency and collaboration outpace those still relying on hope and slide decks.

How to Stress-Test Your AI Readiness

If you want a clear read on your own maturity, start with the basics. Here’s a checklist the best teams used to get honest about where they stood—and what needed work:

- Do you have a solid, trustworthy data foundation for AI projects?

- Are your AI workflows governed and fully auditable, with policy enforcement that actually runs automatically?

- Is the executive team pushing for real cross-functional upskilling around AI, not just training for one group?

- Do your vendors and supply chain partners meet attestation (proof that a workload or process hasn’t been tampered with) and transparency standards you can verify?

- Can you measure and report ROI on AI—and are those results driving ongoing improvement, not just collecting dust?

If you can answer yes to most of these, you’re ahead of the curve. If not, you’ve got a clear starting point for what to fix next.
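The checklist above can be turned into a quick self-assessment. The sketch below is one possible way to do it, not an official scoring method: the key names are invented for illustration, and "most" is assumed to mean more than half of the five questions.

```python
# The five checklist questions, keyed by hypothetical short names.
CHECKLIST = {
    "data_foundation": "Solid, trustworthy data foundation for AI projects",
    "automated_governance": "Governed, auditable workflows with automatic policy enforcement",
    "cross_functional_upskilling": "Executive push for cross-functional AI upskilling",
    "vendor_attestation": "Vendors meet verifiable attestation and transparency standards",
    "roi_measurement": "ROI on AI is measured, reported, and drives improvement",
}


def assess(answers):
    """Return (ahead_of_curve, gaps) for a dict of yes/no answers.

    Unanswered questions count as "no". "Ahead of the curve" is assumed
    to mean a yes on more than half of the questions.
    """
    gaps = [key for key in CHECKLIST if not answers.get(key, False)]
    yes_count = len(CHECKLIST) - len(gaps)
    return yes_count > len(CHECKLIST) / 2, gaps


# Example answers for a hypothetical organization.
answers = {
    "data_foundation": True,
    "automated_governance": True,
    "cross_functional_upskilling": False,
    "vendor_attestation": False,
    "roi_measurement": True,
}

ahead, gaps = assess(answers)
print("Ahead of the curve:", ahead)
for key in gaps:
    print("Fix next:", CHECKLIST[key])
```

The list of gaps is the more useful output: it names the specific items to work on next rather than collapsing everything into a single score.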

Ready to Benchmark Your Maturity?

The “agentic era” will reward organizations that turn experimentation into verifiable, scalable business progress. Use OPAQUE’s interactive assessment tool to benchmark your maturity and surface the enablers and gaps holding you back.

The lesson from confidential AI’s most successful leaders is clear: Transformation comes not from technical bravado or marketing hype, but from disciplined, evidence-backed progression through the stages of readiness. Most enterprises are still at Level 1 or 2—leaving massive value on the table. The time to move decisively is now.

Ready to assess your Confidential AI readiness? Start your journey—move from optimism to proven ROI.

Visit Confidential Computing Summit 2025 for exclusive resources, expert insights, and tools from the premier AI infrastructure event of the year to move from pilot to real results.