Trusting AI with Your Enterprise Data: Solving the LLM Privacy Puzzle with Confidential AI

Enterprises are racing to unlock the potential of large language models (LLMs) through Retrieval-Augmented Generation (RAG), offering employees powerful, natural language access to proprietary data. But this promise brings a hard question: How do you guarantee data privacy when sensitive enterprise information flows into and through an LLM?

Despite encryption at rest, network segmentation, or even prompt filtering, traditional AI architectures often fail to provide end-to-end privacy assurance. Data is decrypted for processing. LLMs operate in exposed environments. There’s little visibility—let alone cryptographic proof—of how data is used.

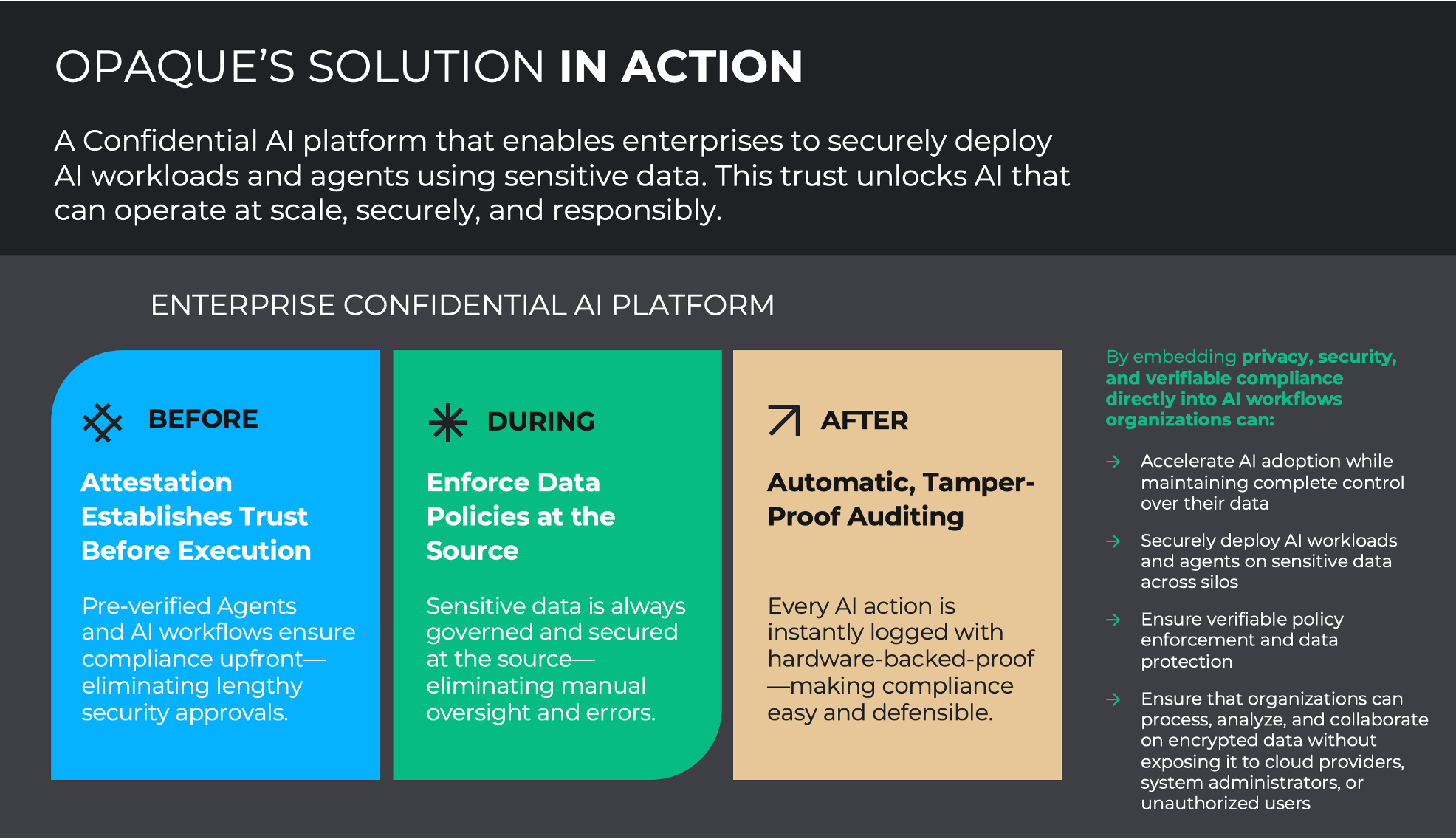

At Opaque, we believe privacy and AI don’t have to be at odds. In fact, we’ve built the Confidential AI Platform to make sure they work hand in hand. This post explores the privacy lifecycle of enterprise data in LLM workflows, and how Opaque delivers verifiable protections before, during, and after each interaction.

Before: Verifying Trust Before Any Data Moves

Most RAG architectures fail on privacy before a single token is generated. Why? Because the pipeline assumes trust in the LLM provider, the data connection, and the runtime environment without any cryptographic verification.

With Opaque, we flip the model: No data is exposed until trust is proven.

- Hardware-rooted attestation verifies that the LLM environment is running inside a confidential enclave on trusted CPUs or GPUs (e.g., Intel SGX, or NVIDIA H100 GPUs in confidential computing mode).

- Policy validation ensures the LLM and its orchestration pipeline (e.g., agents, plugins, connectors) are only allowed to execute if approved governance policies are in place.

- Encrypted RAG source binding means even the knowledge base (vector store or database) stays encrypted until it’s read inside an enclave that passes attestation.

This pre-flight security posture is what distinguishes Confidential AI. It’s not just “encrypted at rest”—it’s provably isolated and controlled before data ever leaves its vault.
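To make the "no data until trust is proven" idea concrete, here is a minimal Python sketch of a pre-flight gate. It is an illustration under stated assumptions, not Opaque's implementation: `AttestationReport`, `APPROVED_MEASUREMENTS`, and `release_data_key` are hypothetical names, and the `signature_valid` flag stands in for the real work of verifying a hardware vendor's signature chain.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical allow-list of enclave code measurements approved by governance.
APPROVED_MEASUREMENTS = {hashlib.sha256(b"rag-pipeline-v1").hexdigest()}


@dataclass(frozen=True)
class AttestationReport:
    """Simplified stand-in for a hardware attestation report (assumption)."""
    measurement: str       # hash of the code loaded into the enclave
    signature_valid: bool  # outcome of verifying the vendor signature chain
    policy_id: str         # governance policy the workload claims to run under


def release_data_key(report, approved_policies, data_key):
    """Return the data key only if every pre-flight check passes."""
    if not report.signature_valid:
        raise PermissionError("attestation signature not verified")
    if report.measurement not in APPROVED_MEASUREMENTS:
        raise PermissionError("enclave measurement is not on the allow-list")
    if report.policy_id not in approved_policies:
        raise PermissionError("no approved governance policy for this workload")
    return data_key


if __name__ == "__main__":
    report = AttestationReport(
        measurement=hashlib.sha256(b"rag-pipeline-v1").hexdigest(),
        signature_valid=True,
        policy_id="policy-hr-001",
    )
    key = release_data_key(report, {"policy-hr-001"}, b"demo-data-key")
    print("key released:", key is not None)
```

The design point is that the key for the encrypted knowledge base is the last thing handed over: any failed check raises before the key is returned, so the failure mode is "no data" rather than "data in an unverified environment."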

During: Enforcing Policy Through the Entire LLM Workflow

Once a prompt hits the LLM, the real complexity begins: multiple agents may be invoked, tools called, RAG queries run, and user data processed—all potentially in ways that violate privacy rules or compliance boundaries.

Opaque’s Confidential AI Platform enforces continuous, fine-grained policy controls:

- RAG data decryption only inside enclaves, with runtime enforcement of access rules: e.g., “HR data must not be accessed by customer support agents.”

- Governance on prompt inputs and tool outputs: define redaction, anonymization, or filtering rules on the fly, without trusting the LLM itself to behave.

- Agent interaction tracing ensures every action (tool call, function chain, memory access) is authorized by the approved data-use policy.

Because everything runs within a secure, attested enclave, even insider threats or rogue code can’t exfiltrate data or break the chain of custody.
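The access and redaction rules described in this section can be sketched in a few lines of Python. This is a simplified illustration, not the platform's policy engine: the `ACCESS_POLICY` table, the role and domain names, and the email-masking rule are all assumptions chosen to mirror the "HR data must not be accessed by customer support agents" example.

```python
import re

# Hypothetical data-use policy: which agent roles may read which data domains.
ACCESS_POLICY = {
    "hr_agent": {"hr", "general"},
    "customer_support_agent": {"support_tickets", "general"},
}

# Illustrative redaction rule for retrieved text: mask email addresses.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def authorize(agent_role, data_domain):
    """Deny by default; allow only the domains listed for the role."""
    return data_domain in ACCESS_POLICY.get(agent_role, set())


def redact(text):
    """Apply the redaction rule outside the LLM, so it does not rely on the model."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def retrieve(agent_role, data_domain, documents):
    """Check the access rule first, then redact whatever is returned."""
    if not authorize(agent_role, data_domain):
        raise PermissionError(f"{agent_role} may not access {data_domain} data")
    return [redact(doc) for doc in documents]


if __name__ == "__main__":
    docs = ["Contact jane.doe@example.com about the review."]
    print(retrieve("hr_agent", "hr", docs))
    try:
        retrieve("customer_support_agent", "hr", docs)
    except PermissionError as err:
        print("blocked:", err)
```

Two choices are worth noting: unknown roles get an empty permission set, so the default is denial, and redaction runs in the retrieval path rather than in a prompt instruction, which matches the goal of not trusting the LLM to police itself.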

After: Proving What Happened with Cryptographic Audit Trails

Privacy doesn’t end with a response. Organizations need to prove that sensitive data was protected and used responsibly—especially in regulated industries like healthcare, finance, and government.

Opaque delivers post-interaction accountability:

- Tamper-evident audit logs signed by the enclave’s hardware root of trust.

- End-to-end cryptographic evidence showing what data was accessed, how it was used, and which agents or models handled it.

- Policy verification results proving that the entire LLM pipeline acted in accordance with declared governance policies.

This after-the-fact auditability isn’t just helpful; evidence of this kind is what auditors look for under standards and regulations such as ISO 27001, SOC 2, GDPR, and HIPAA.
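One common way to build an audit trail whose tampering can be detected is a hash chain in which every entry is authenticated together with the entry before it. The Python sketch below shows that general technique; it is not a description of Opaque's log format. It uses an HMAC with a fixed demo key as a stand-in for a signature from the enclave's hardware-protected key, and the function names and event fields are hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: a real enclave would sign with a key protected by the hardware
# root of trust (typically an asymmetric key); this fixed key is for demo only.
DEMO_SIGNING_KEY = b"demo-key-not-for-production"
GENESIS_HASH = "0" * 64


def _mac(prev_hash, event):
    """Authenticate the event together with the previous entry's MAC."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True).encode()
    return hmac.new(DEMO_SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def append_entry(log, event):
    """Append an event, chaining it to the last entry in the log."""
    prev_hash = log[-1]["mac"] if log else GENESIS_HASH
    log.append({"prev": prev_hash, "event": event, "mac": _mac(prev_hash, event)})


def verify_log(log):
    """Return True only if every entry is intact and in its original order."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if not hmac.compare_digest(entry["mac"], _mac(entry["prev"], entry["event"])):
            return False
        prev_hash = entry["mac"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"agent": "hr_agent", "action": "rag_query", "data": "hr"})
    append_entry(log, {"agent": "hr_agent", "action": "tool_call", "tool": "summarize"})
    print("intact:", verify_log(log))
    log[0]["event"]["data"] = "finance"  # simulate after-the-fact tampering
    print("after tampering:", verify_log(log))
```

Because each entry's MAC covers the previous entry's MAC, editing, removing, or reordering any record breaks verification from that point on. A production design would swap the shared demo key for an asymmetric signature so that auditors can verify the log without being able to forge it.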

The Confidential Advantage for Enterprise AI

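Opaque’s Confidential AI Platform addresses the full data privacy lifecycle across LLM interactions: verified trust before any data moves, continuous policy enforcement during the workflow, and cryptographic audit trails afterward.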

Conclusion

RAG-powered LLMs are reshaping enterprise productivity. But with that power comes responsibility—especially when proprietary data, customer information, or regulated content is involved.

Opaque delivers a secure, end-to-end platform for AI workflows that protects sensitive data before, during, and after every LLM interaction. Confidential computing isn’t an add-on—it’s the foundation.

If you’re building private LLMs or want to unlock RAG without compromising data privacy, talk to us about deploying on the Opaque Confidential AI Platform.